Claude Skills: Productivity Game-Changer or Cognitive (and Cyber) Threat?

Summary

Anthropic’s Claude generative AI model now has Agent Skills, virtual folders with instructions for specialised tasks. While this could boost productivity by automating repetitive tasks, it raises concerns about cognitive offloading and potential cybersecurity risks. To mitigate these risks, only trusted Skills should be used, they should be reserved for mundane tasks, and their activity should be closely monitored. This approach frees humans to create and innovate.

Key Points

Claude’s New Skills: Anthropic’s Claude AI model now has Agent Skills, virtual folders with instructions for carrying out specialised tasks.

Potential Benefits: Claude Skills could boost productivity by automating repetitive tasks, freeing up humans for more creative and innovative work.

Potential Risks: This new feature raises concerns about cognitive offloading and its long-term impact on critical thinking skills. There are also cybersecurity risks, which call for careful monitoring and for limiting use to trusted Skills only.

Cognitive Offloading Definition: Cognitive offloading can be defined as the use of technology to handle mentally taxing tasks, reducing the amount of information held in working memory. It can boost performance and efficiency in the short term. However, there is a concern that frequent cognitive offloading through AI may erode critical thinking skills, because it reduces engagement in deeper thinking.

Introduction

The robots are at it again!

In October 2025, Anthropic launched Agent Skills with the aim of helping their Claude generative AI model get even better at specialised tasks. That’s right, folks: those repetitive admin tasks we all hate, like formatting data for a monthly report or writing product descriptions that fit our brand guidelines, might be things of the past! That’s what we all hoped, or even expected, AI would do for us, right? It would take on the tedious, mind-numbing tasks so we humans could spend more of our time doing the creative stuff. The fun stuff. I don’t just mean painting and writing poetry. I also mean running our businesses, innovating, strategising and building connections. That kind of fun stuff.

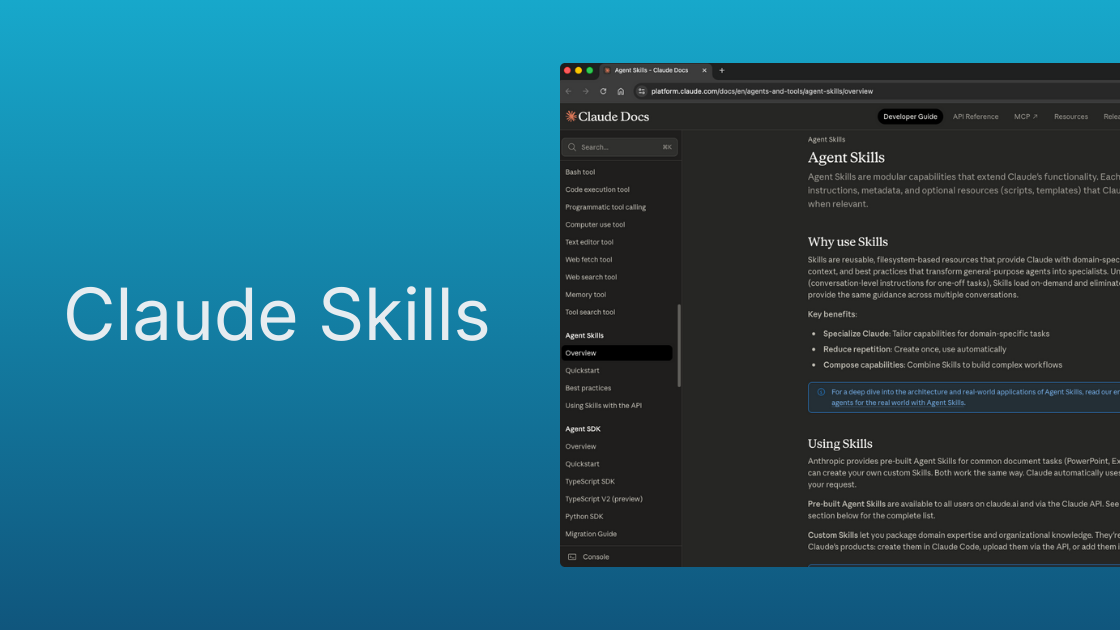

Anthropic’s engineers describe Skills as virtual folders containing instructions on how to perform those specific tasks. Alongside the instructions, a Skill can bundle scripts, program files and other resources. In all fairness to Anthropic, the inner workings and thinking behind Skills are well documented, helping developers and end users alike take advantage of this new feature and create their own Skills.
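Because a Skill is just a folder, anyone can look inside it before trusting it. Here is a minimal Python sketch of that first look, assuming the Skill has been downloaded to a local folder: it separates instruction and resource files (such as the Skill’s `SKILL.md` instructions) from files that can be executed and therefore deserve a closer read. The `inventory_skill` function and the list of “script” extensions are hypothetical choices made for illustration; they are not part of Anthropic’s tooling.

```python
from pathlib import Path

# Assumption: file extensions treated as "executable" for review purposes.
# This list is illustrative only and deliberately not exhaustive.
SCRIPT_EXTENSIONS = {".py", ".sh", ".bash", ".js", ".ts", ".ps1", ".bat", ".exe"}


def inventory_skill(skill_dir: str) -> dict[str, list[str]]:
    """Split a Skill folder's files into scripts (to review) and everything else.

    Paths are returned relative to the Skill folder, using forward slashes,
    and each list is sorted so the report is stable between runs.
    """
    root = Path(skill_dir)
    scripts: list[str] = []
    other: list[str] = []
    # rglob("*") walks every file and sub-folder inside the Skill.
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        rel = path.relative_to(root).as_posix()
        if path.suffix.lower() in SCRIPT_EXTENSIONS:
            scripts.append(rel)
        else:
            other.append(rel)
    return {"scripts": sorted(scripts), "other": sorted(other)}
```

Calling `inventory_skill("path/to/skill")` returns the scripts a human should read before granting consent. An extension check is only a rough filter: a determined attacker can hide code elsewhere, so this supports, rather than replaces, reading the Skill properly.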

Agent Skills: Specialized capabilities you can customize, from Anthropic.

Concerns

So what’s the problem with letting AI handle the boring stuff?

Cognitive Offloading

Using AI more extensively has the potential to increase cognitive offloading. Generally speaking, this is the use of any kind of technology to handle a mentally taxing task. Sticking a note to your fridge door to remind you to buy eggs or using a calculator instead of performing mental arithmetic both count as forms of cognitive offloading. I do it every time I ask Siri to remind me when it’s Bin Night. My local council has even created an Alexa skill to help residents remember when to put the bins out! By relying on these external devices and technologies, we reduce the amount of information we need to hold in working memory and, with it, the cognitive load placed upon us.

“Alexa, when do I need to put the recycling out?”

Cognitive offloading can be extremely helpful in the short term. It can boost our performance and help us get things done much more efficiently. Like all good things, though, too much will rot your teeth! By not fully engaging with the task at hand, we’re not committing it to memory. We’re not learning from the experience. We’re not practising and honing our skills. This could be a problem if we ever need to carry out the task manually, without technological assistance. Similarly, if we’re interrupted part-way through a complex task and need to resume it later, picking up the thread will be much harder. Constructivist learning theory tells us that we construct knowledge from our experiences. In other words, to truly master a skill, we need to get our hands dirty and give it a go ourselves.

Looking back on my own working life, there are certain skills I picked up along the way that turned out to be quite transformative. Data analysis skills in particular. I acquired those through repeated practice. Trial and error. A lot of error. But once I had truly embedded them in my brain, I was able to apply them to a multitude of situations. If I were just starting out today and needed to compare two sets of data, chances are I too would ask an AI model to do it for me. But way back in 2004, learning how to do a VLOOKUP in Excel opened my eyes to new ways of viewing data. Ultimately, it made me better at my job and improved my prospects.

I kid you not: my life changed the day I learned how to do a VLOOKUP.

Even more worrying, though, is the link between cognitive offloading and a reduction in critical thinking skills. A 2025 study found a significant negative correlation between frequent AI use and critical thinking skills, mediated by… (you guessed it) cognitive offloading. In practical terms, this suggests that frequent use of AI tools leaves less opportunity to engage in deeper thinking, so over time the skill might eventually be lost. Critical thinking is essential for analysing information, assessing situations and constructing entirely new ways of solving problems. Surely that’s a skill humanity needs to hold on to?

Risk of Ransomware

The convenience of Claude Skills is also their Achilles’ heel: they only need to be given consent once. A Skill that is marked as trusted can then carry out any actions and run any code it wants, all of it completely invisible to the user.

Don’t get me wrong. Automation isn’t bad. I’m a huge advocate of automation!

Harnessing technology to streamline repetitive, mindless tasks is an excellent way to squeeze every ounce of productivity out of your day. It’s efficient. The simplest of technological interventions, like using a password manager to automatically log you into a web app, or a mailbox rule to delete all emails from Barry in Accounts, can give us back precious seconds of our day. That’s as satisfying as it is efficient.

The danger, though, is automating without control. Giving AI agents like Claude permission to run processes, download files and make decisions without our express knowledge or intervention is asking for trouble. Whether maliciously (by bad actors) or carelessly (by naive actors), Skills with sufficient permissions can become hacking tools. Working quietly in the background, a Skill can turn into a malware vector in the blink of an eye. All it takes is for a single user to give a compromised Skill permission to run, and an entire organisation is under threat.

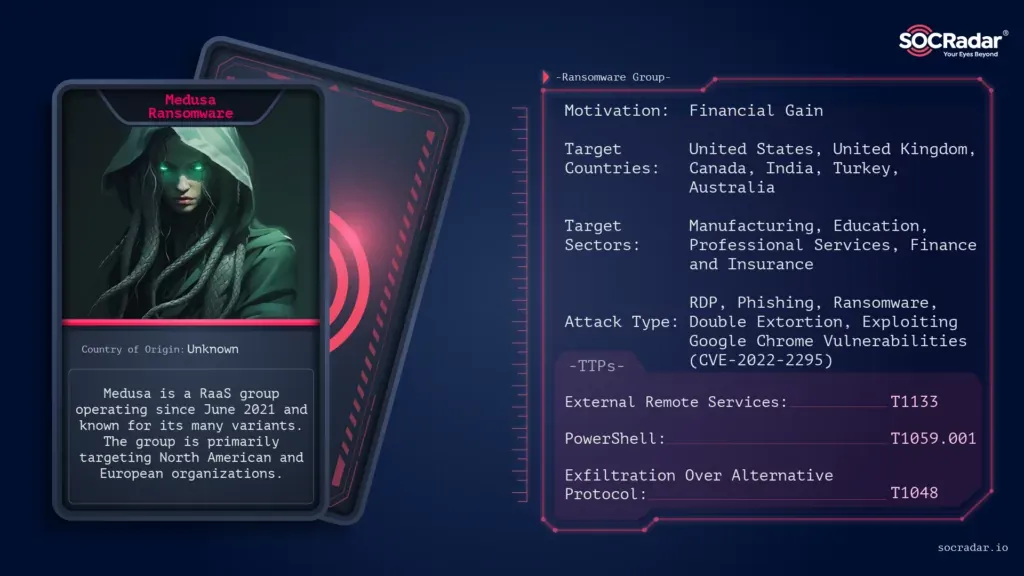

Researchers at Cato CTRL decided to put these concerns to the test. Within the safety of a sandboxed environment, they used a seemingly harmless Gif-Generator Skill to execute a MedusaLocker ransomware attack. And nobody wants that with their morning cup of tea! Much like her mythological namesake, MedusaLocker turns digital systems to (metaphorical) stone, encrypting their data and holding it hostage.

Conclusion

So, what’s the moral of the story?

Claude Agent Skills have the potential to be a powerful tool for task automation. However, before adding them to our tech stack, we need to be mindful of the risks, both from a cognitive and a cybersecurity perspective.

There are steps we can take, though, to help manage those risks:

- Only use trusted Skills

- Monitor Skill activity

- Use Skills that add value

Skills that have been shared online (e.g. on social media or in various repositories) are best avoided. They may have been tampered with, or even created specifically to perform malicious tasks without our knowledge. Closely monitoring the activity of any Skills we have implemented is key to making sure they are, in fact, doing what we expect them to do. And nothing more! Finally, we should aim to use only Skills that truly add value: Skills that handle the mundane and give us back our precious time to create and innovate.
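One low-tech way to keep an eye on a Skill is to record a fingerprint of every file it contains when you first vet it, then check later that nothing has quietly changed. Here is a minimal sketch of that idea using SHA-256 hashes from Python’s standard library. It assumes the Skill lives in a local folder you can read; the `snapshot` and `diff_snapshots` functions are hypothetical names for illustration, not part of any Anthropic tool.

```python
import hashlib
from pathlib import Path


def snapshot(skill_dir: str) -> dict[str, str]:
    """Map each file's path (relative to the Skill folder) to its SHA-256 digest."""
    root = Path(skill_dir)
    digests: dict[str, str] = {}
    for path in root.rglob("*"):
        if path.is_file():
            rel = path.relative_to(root).as_posix()
            digests[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def diff_snapshots(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Report files added, removed or modified since the baseline was taken."""
    added = sorted(set(current) - set(baseline))
    removed = sorted(set(baseline) - set(current))
    # A file counts as modified if it exists in both snapshots with different hashes.
    modified = sorted(
        name for name in set(baseline) & set(current) if baseline[name] != current[name]
    )
    return {"added": added, "removed": removed, "modified": modified}
```

In practice you would take a `snapshot` when you approve a Skill, store it somewhere the Skill cannot write to, and compare against a fresh snapshot on a schedule. Note the limits of this check: it detects changes to the Skill’s own files, not what its scripts actually do when they run, so it complements rather than replaces monitoring the Skill’s activity.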